Examples#

General-purpose and introductory examples for the imbalanced-learn toolbox.

Examples showing imbalanced-learn API usage#

Examples that show some details regarding the API of imbalanced-learn.

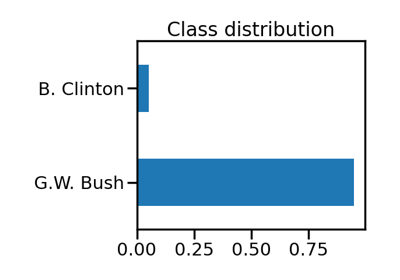

Examples based on real world datasets#

Examples that use real-world datasets.

Customized sampler to implement an outlier rejections estimator

Benchmark over-sampling methods in a face recognition task

Porto Seguro: balancing samples in mini-batches with Keras

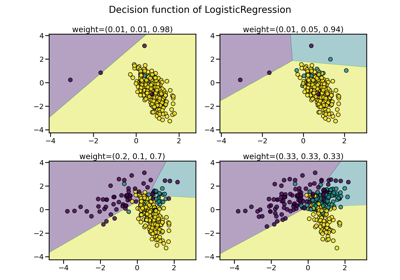

Fitting model on imbalanced datasets and how to fight bias

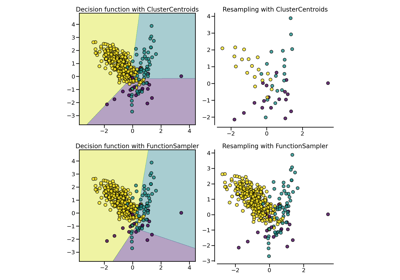

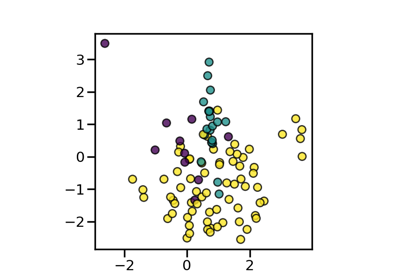

Examples using combine class methods#

Combined methods mix over- and under-sampling. Generally, SMOTE is used for over-sampling, while cleaning methods (i.e., ENN and Tomek links) are used to under-sample.

Compare sampler combining over- and under-sampling

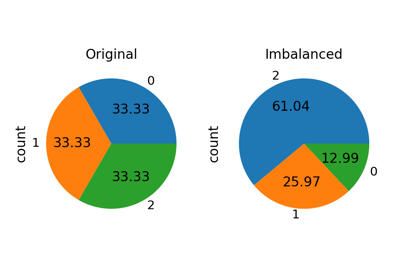

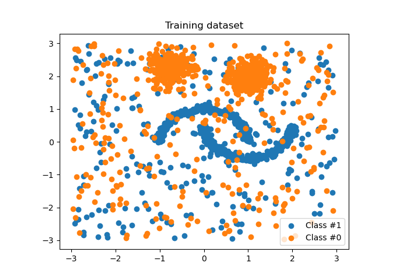

Dataset examples#

Examples concerning the imblearn.datasets module.

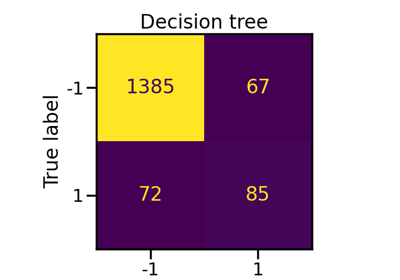

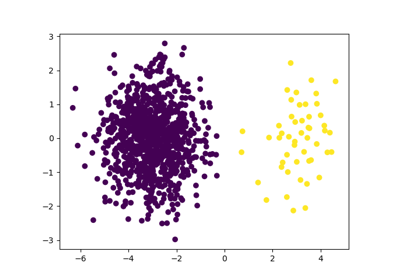

Example using ensemble class methods#

Under-sampling methods imply that samples of the majority class are lost during the balancing procedure. Ensemble methods offer an alternative that uses most of the samples: an ensemble of balanced sets is created and later used to train any classifier.

Evaluation examples#

Examples illustrating how to evaluate classification on imbalanced datasets.

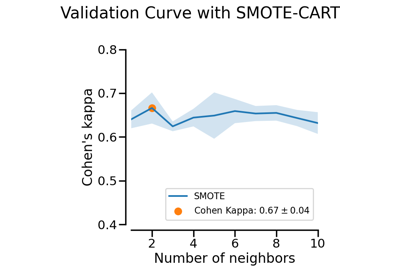

Model Selection#

Examples related to the selection of balancing methods.

Distribute hard-to-classify datapoints over CV folds

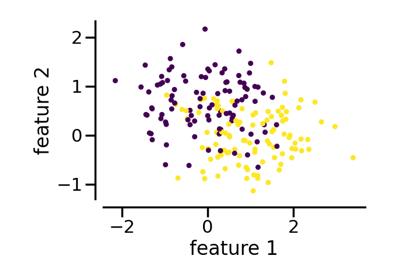

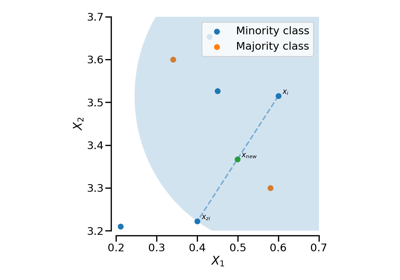

Example using over-sampling class methods#

Data balancing can be performed by over-sampling such that new samples are generated in the minority class to reach a given balancing ratio.

Effect of the shrinkage factor in random over-sampling

Pipeline examples#

Examples of how to use a pipeline to include under-sampling with scikit-learn estimators.

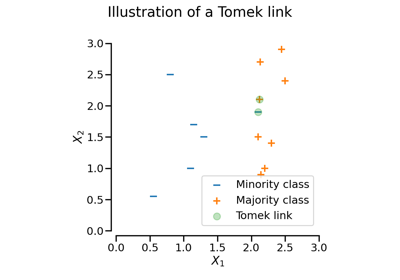

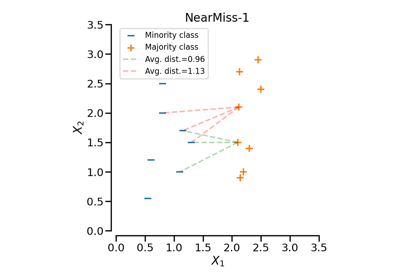

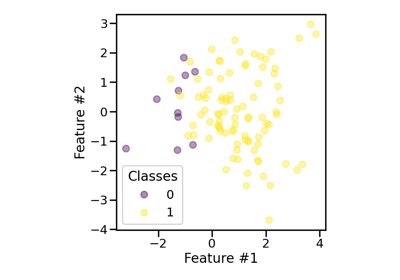

Example using under-sampling class methods#

Under-sampling refers to the process of reducing the number of samples in the majority classes. The implemented methods can be categorized into 2 groups: (i) fixed under-sampling and (ii) cleaning under-sampling.